TechUnder5 — where tech meets simplicity in under 5 minutes.

In this post, we’ll break down one of the most talked-about ideas in AI: Generative AI. It powers chatbots, image makers, and code assistants by predicting what comes next—turning patterns in data into useful drafts of text, images, and more.

Table of Contents

- What is Generative AI?

- Acronyms, expanded (and what they mean)

- How GenAI works (in plain English)

- An analogy to make it stick: prediction, not creation

- Popular Use Cases (and how they’re evolving)

- Strengths and Limits

- The Takeaway

What is Generative AI?

Generative AI (GenAI) is a branch of artificial intelligence that learns patterns from large datasets and then generates new content—text, images, code, audio, even video—by predicting what should come next. It does not “create” in a human sense; it samples the most likely next pieces (tokens/pixels/notes) given what it has already seen.In that sense it is actually predictive.

GenAI is advanced autocomplete across many media types.Popular GenAI tools you’ve likely used or seen include ChatGPT and Claude (text/coding), Gemini and Copilot (assistants/productivity), Midjourney, DALL·E, and Stable Diffusion (images), and Runway (video).

Acronyms, expanded (and what they mean)

-

AI(Artificial Intelligence): Broad field focused on building systems that perform tasks requiring human-like intelligence.

-

ML(Machine Learning): Subfield of AI where models learn patterns from data to make predictions or decisions.

-

NN(Neural Network): A type of ML model (layers of interconnected “neurons”) that learns complex patterns; today’s GenAI typically uses transformer neural networks.

-

NLP(Natural Language Processing): Methods for computers to understand and generate human language.

-

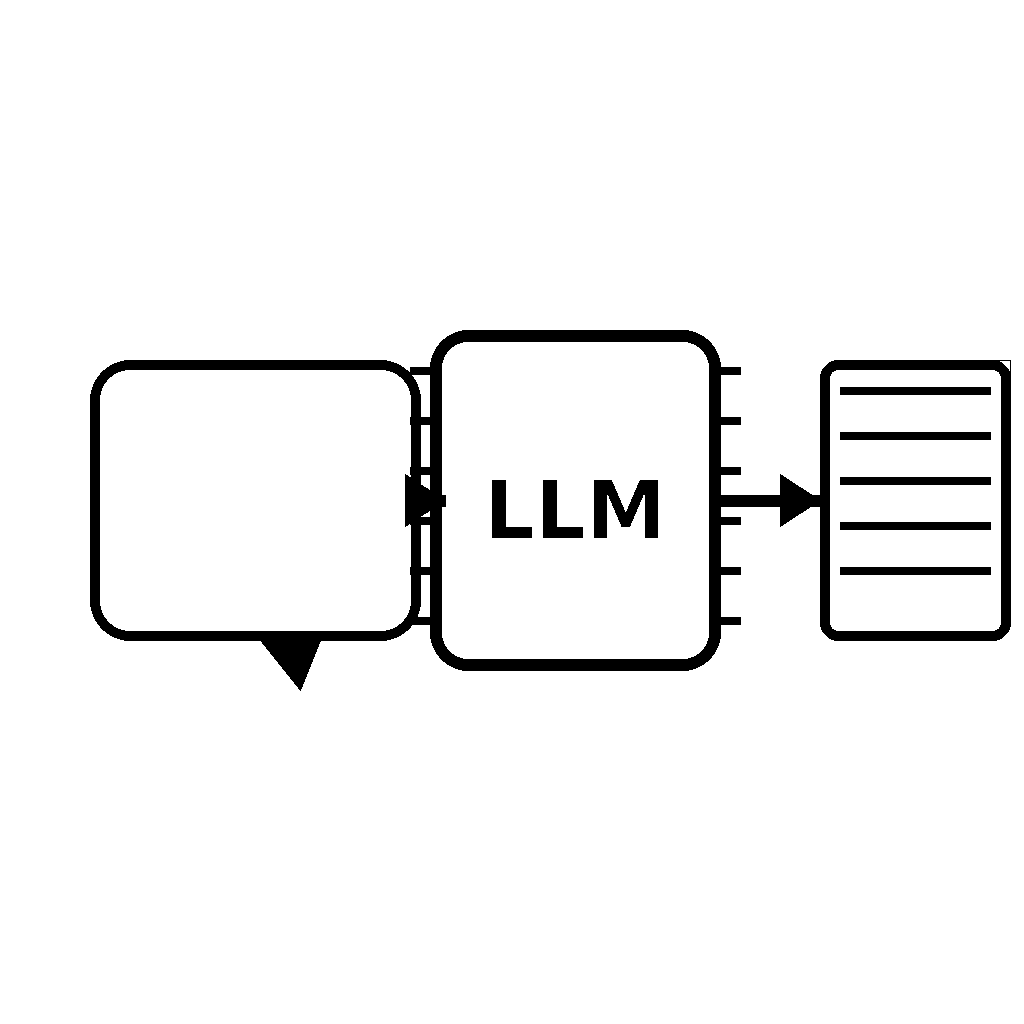

LLM(Large Language Model): A large transformer-based NN trained on vast text/code to predict the next token; examples power chatbots and code assistants.

How GenAI works (in plain English)

It’s industrial-strength autocomplete. The model learns patterns from lots of examples, then predicts the next piece (a word/token, a pixel patch, an audio chunk) over and over until it’s done.

1) Pre-training

- The model (learned mathematical function) ingests huge datasets and learns to predict what comes next.

- Text models learn next-token prediction; image/video models often learn to remove noise step-by-step (diffusion) to form a picture or frame.

2) Fine-tuning / Instruction-tuning (optional)

- After pre-training, the model is refined to follow instructions, adopt safer/helpful behavior, or specialize in a domain (e.g., coding, medicine).

3) Inference (when you use it)

- You provide a prompt → the model converts it to tokens → it predicts one token at a time, appending each new token back into the context, until a stop signal or length limit.

- For images, you provide a text prompt and the model iteratively guides noise into an image that matches the prompt.

An analogy to make it stick: prediction, not creation

Think of GenAI as a supercharged pattern-predictor:Astrology-style analogy (with a caveat).

An astrologer synthesizes many data points and past patterns to predict an outcome. Similarly, a GenAI model looks at countless data patterns and predicts the most likely continuation. Important difference: astrology is not evidence-based, while GenAI uses statistical learning optimized to reduce error on real data.

Popular Use Cases (and how they’re evolving)

- Writing & knowledge work: It drafts, edits, summarizes, and translates. It’s now adding grounded answers via retrieval (RAG) and structured outputs for automation.

- Coding: It completes code, refactors, and generates tests. Next up: tool-using agents that run code, read logs, and suggest fixes.

- Design & media: It creates images, explores layouts, and builds video storyboards. It’s moving toward multimodal pipelines from text → image → video.

- Customer experience: It powers chatbots, support summaries, and email replies. These are becoming actionable—booking meetings or creating tickets—with guardrails.

- Data & analytics: It writes SQL, makes charts, and drafts reports. The trend is toward conversational BI and agentic workflows on enterprise data.

- Education & training: It delivers personalized explanations, practice problems, and feedback, and is evolving into adaptive tutoring.

Strengths and Limits

Strengths

- Speed: Generates content fast and helps you explore ideas.

- Accessibility: Lowers the barrier to quality writing, design, and analysis.

- Practicality: Great for drafts, brainstorming, and automation.

Limits

- Hallucinations: Can produce confident but incorrect statements or citations.

- Drift:

- Data/Concept drift: As your data or environment changes, quality can drop without monitoring and updates.

- Session drift: In long chats, tone or facts can shift.

- Bias & fairness: Reflects biases present in the training data.

The Takeaway

GenAI isn’t magic—it’s prediction you can steer. With clear prompts, simple guardrails, and a quick human check, it becomes a daily force-multiplier for writing, coding, and design—the kind of practical tech we break down at TechUnder5.